We have previously discussed the power of the Plan-Do-Study-Act (PDSA) cycle in bringing about collaborative, sustainable improvement. We have also emphasised the importance of allowing students to play a key role in improving their school and classroom, giving students a ‘real voice’. In this blog post, we share another example: how a teacher and her students used the PDSA process to improve learning and engagement in their physical education (PE) classroom. (You can also view this as a QLA case study video.)

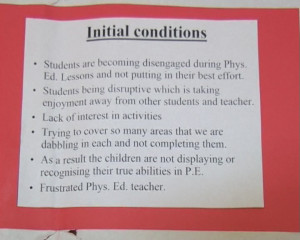

Chris is a leading specialist teacher at a Victorian primary school. She observed the school’s Year 6 students becoming increasingly disengaged during their weekly PE lessons. PE teachers were stressed and student behaviour was worsening. No one was enjoying PE!

Chris decided it was time to set students and teachers to work to improve PE by applying the PDSA cycle.

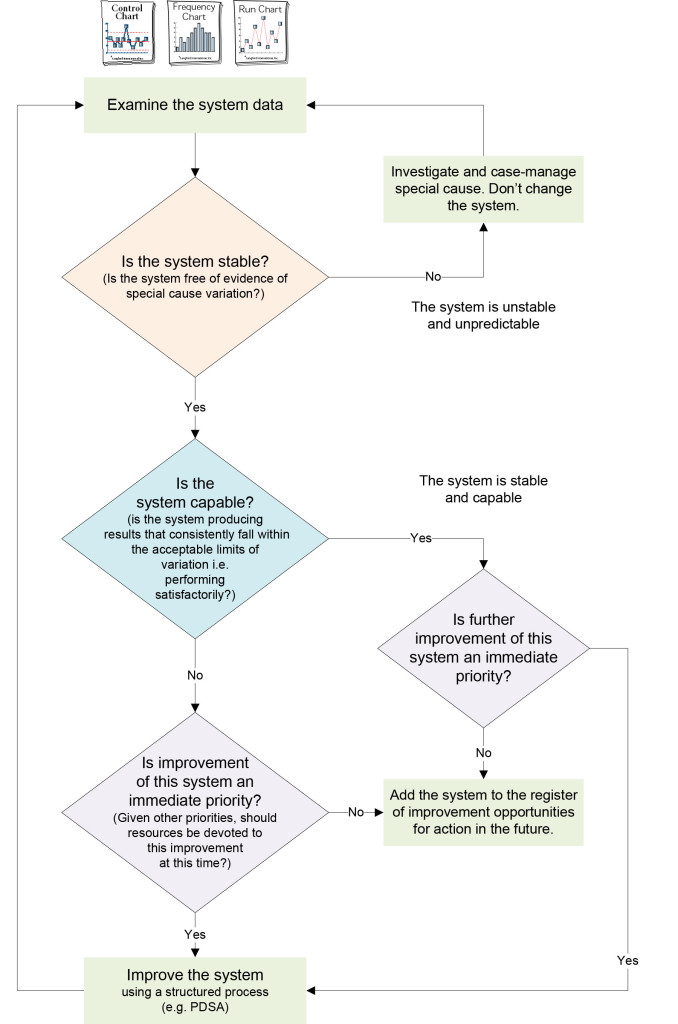

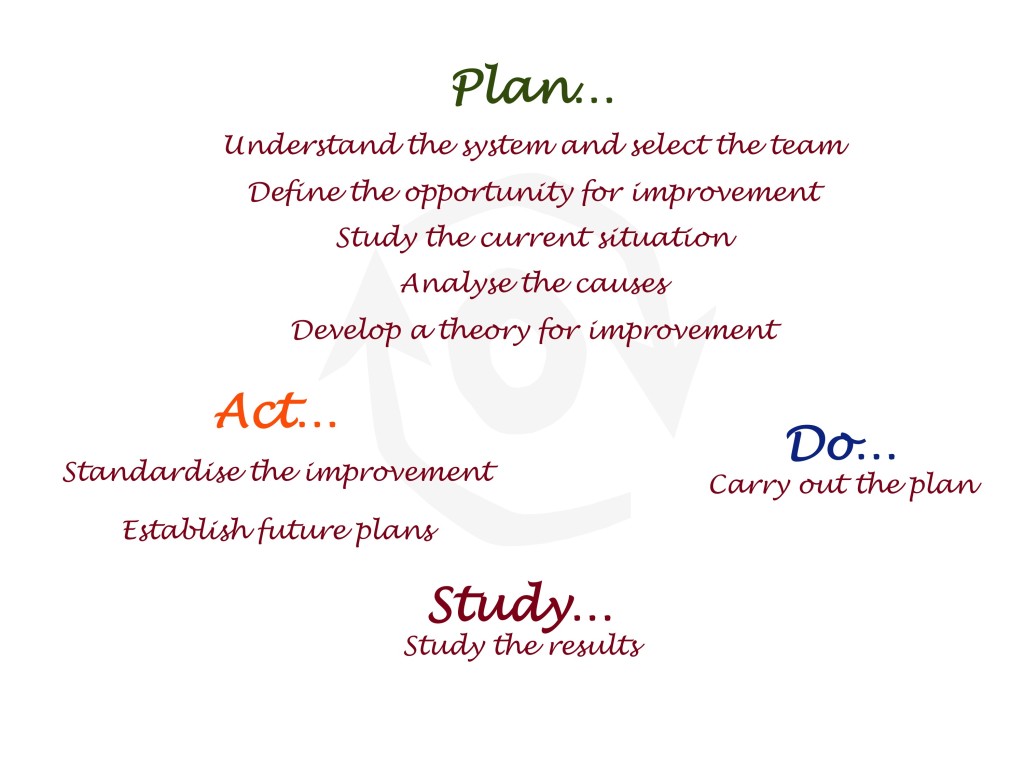

As we have seen previously:

PDSA is a highly effective improvement approach, based upon a cycle of theory, prediction, observation, and reflection.

It involves applying a structured process to achieve sustainable improvement.

This includes:

- defining the opportunity for improvement by agreeing the purpose and establishing a shared vision of excellence

- focusing improvement efforts on a system or process (rather than blaming individuals)

- identifying root causes, not symptoms

- developing and applying a theory for improvement

- reflecting on the outcomes achieved to agree a new ‘best method’ or further improvement needed.

Here’s how…

Chris applied the PDSA process with her students. Together they documented a comprehensive storyboard to capture their agreements and the data they collected, and to record their progress in applying the PDSA process.

Here’s what they did:

- Students and teachers discussed and agreed the opportunity for improvement: to improve their PE classes.

- They studied the current situation. What did PE currently look like and feel like, and what was going on? They agreed that students were disengaged, uninterested and not putting in their best efforts; that some students were disrupting the class, preventing others from enjoying PE; and that teachers were frustrated.

PDSA storyboard extract: brainstorm of the current situation in PE

- They collected data to measure the extent of the dissatisfaction with PE. A correlation chart was used to measure student perception. The data revealed low levels of student enjoyment (fun) and learning in the PE classroom.

PDSA storyboard extract: collecting data using a correlation chart – how much are students enjoying and learning in PE?

- Students then brainstormed and themed the drivers and barriers associated with motivation and participation in their PE classroom, using sticky notes and an affinity diagram. The major barriers they identified were: ‘inappropriate behaviour’, ‘boring classes’, ‘lack of student choice’, ‘the weather’ and ‘wasting time’.

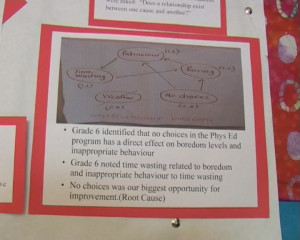

PDSA storyboard extract: affinity diagram of the barriers to student motivation and participation in PE

- These barriers were analysed using an interrelationship digraph to agree the root causes. (They knew that by working on the root causes of their problem, they would realise the greatest return on their improvement efforts.) For the PE students, this revealed ‘lack of choice’ as the root cause. A lack of student choice in PE lessons was seen as a major barrier to participation and motivation. It was affecting the other causes and driving the observed problems with behaviour and performance in their classroom.

PDSA storyboard extract: interrelationship digraph analysing the root causes of a lack of student motivation and participation in PE

- A bone diagram was used with students to further explore the current situation and to agree a vision of excellence for PE: what they wanted PE to be like. The resulting vision set out the characteristics students believed were essential for a great PE lesson: student choice, a clear purpose and process for each session, appropriate behaviour, more minor games, a mix of skills, effective use of time, students understanding what was expected, and knowing whether they were improving.

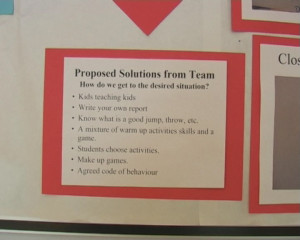

PDSA storyboard extract: bone diagram agreeing a vision of excellence for PE

- They brainstormed possible solutions, which included: ‘kids teaching kids’, students ‘choosing activities’ and ‘writing their own report’, agreeing a student ‘code of behaviour’, clarifying expectations (quality criteria: ‘know what a good throw, jump looks like’), and students ‘making up games’.

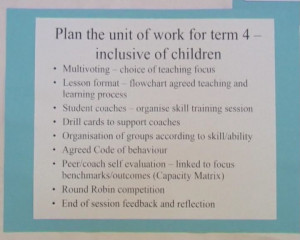

PDSA storyboard extract: brainstorm of possible solutions to improve PE

- These solutions helped them to develop a ‘theory for improvement’ comprising the following key strategies:

- multi-voting to agree the focus of each lesson

- agreeing the lesson format – flowcharting the teaching and learning process

- appointing student skill coaches and documenting skill cards to help the coaches do their job

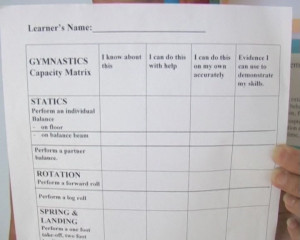

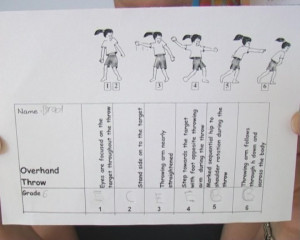

- students undertaking peer evaluation together with their teacher/coach. They developed capacity matrices for key areas of learning to help them do this. They also documented quality criteria describing how to execute essential skills with a high degree of excellence (e.g. how to do an overhand throw). Students used the capacity matrices and quality criteria as the basis for reflecting on and evaluating their progress in PE

- agreeing a code of behaviour

- everyone reflecting and giving feedback after each lesson.

The outcome?

The PE classes applied the agreed strategies and new processes, and a few weeks later reflected on the effectiveness of the improvements they had made (the ‘study’ phase of the PDSA process).

- Behaviour and engagement improved. Students were motivated and learning.

- Students ‘owned’ and were running the PE lessons with minimal guidance from PE teachers! They were taking responsibility for their own learning.

- PE lessons had a productive ‘buzz’! Students were excited. Teachers were happy.

The processes they had developed together were adopted as the new way for PE lessons.

Chris described the PDSA-based collaborative process as having an amazing impact.

Applying the PDSA process, working ‘with the kids’ and not ‘doing to the kids’, brought about significant positive change to PE lessons – improving the way teachers were teaching and students were learning – to great effect!

Learn more…

Download the detailed 9-step PDSA poster.

Purchase IMPROVING LEARNING: A how-to guide for schools, to learn more about the quality improvement philosophy and methods.

Purchase our learning and improvement guide: PDSA Improvement Cycle.

Watch a video of PDSA applied to year one writing.

Watch a video of PDSA applied within a multi-age primary classroom.

Watch a video about student teams applying PDSA to school improvement.